Having access to Microsoft Azure Copilot has been really interesting. On one hand, the use cases are almost limitless; it is essentially a question of what you want to try to do with it. On the other, there is still work to be done to maximise its potential (acknowledged by Microsoft throughout, in fairness).

Working with any of the ‘Copilots’, one important element for me is to get a grounded understanding of what it is capable of, based on something I am an expert on. I cannot tell how good it is if I am asking it for help with something it arguably knows better than I do. So I decided to push it with a Virtual Network.

My objective when starting this post was to reach the point where one single, detailed prompt would spit out an acceptable VNET design statement. Perhaps even the code to build it too, although that part was less important to me right now. Anyone can create a good Azure design, right? 🙂

I am first going to lay out my thinking with respect to a VNET: its purpose, my security posture, connectivity requirements, and likely workloads. I will then reword this into a statement that is aligned to the Cloud Adoption Framework and the Azure network architecture guidance.

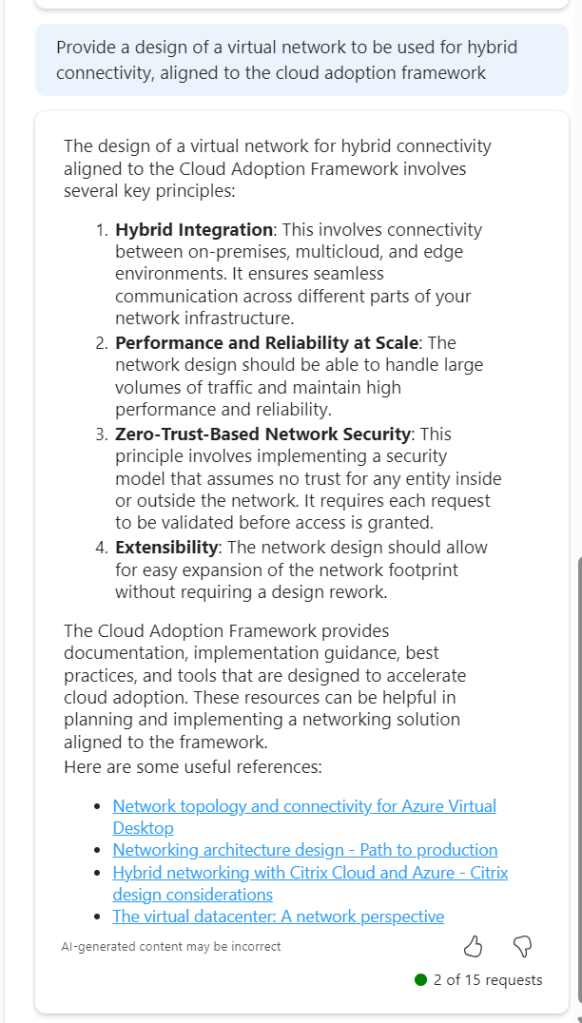
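As a rough illustration of turning that thinking into one detailed prompt, here is a minimal Python sketch. Everything in it is an assumption for illustration only: the `VnetRequirements` class, the `build_prompt` helper, and the example values are hypothetical and are not part of Copilot or any Azure SDK. It simply assembles the four inputs above into a single block of text that could be pasted into a chat.

```python
from dataclasses import dataclass, field


@dataclass
class VnetRequirements:
    """Hypothetical container for the inputs to a VNET design prompt."""
    purpose: str
    security_posture: str
    connectivity: list[str] = field(default_factory=list)
    workloads: list[str] = field(default_factory=list)


def build_prompt(req: VnetRequirements) -> str:
    """Combine the requirements into one multi-line prompt string."""
    lines = [
        "Provide a Virtual Network design statement aligned to the "
        "Cloud Adoption Framework and Azure network architecture guidance.",
        f"Purpose: {req.purpose}",
        f"Security posture: {req.security_posture}",
        "Connectivity requirements: " + "; ".join(req.connectivity),
        "Likely workloads: " + "; ".join(req.workloads),
    ]
    return "\n".join(lines)


# Example values are placeholders, not a recommended design.
example = VnetRequirements(
    purpose="Spoke network for line-of-business applications",
    security_posture="No public inbound access; traffic inspected centrally",
    connectivity=["Peering to a hub network", "Private access to PaaS services"],
    workloads=["Virtual machines", "Web applications"],
)

prompt = build_prompt(example)
print(prompt)
```

Keeping the inputs in a structure like this makes it easy to change one requirement at a time and compare how the response shifts between fresh chats.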

To get a baseline, I started with the basic prompt below. I believe this helps work towards the ‘best’ prompt.

So this jumps all over the place. We have perimeter references, AVS and App Gateway all mentioned. Not ideal. But I did ask for an example, and it does provide links. So let’s tighten our prompt.

This is much better, with proper sequential statements. However, that third link to hybrid with Citrix is irrelevant. Now, as Copilot works in a chat format, let’s use this prompt and response to expand the detail.

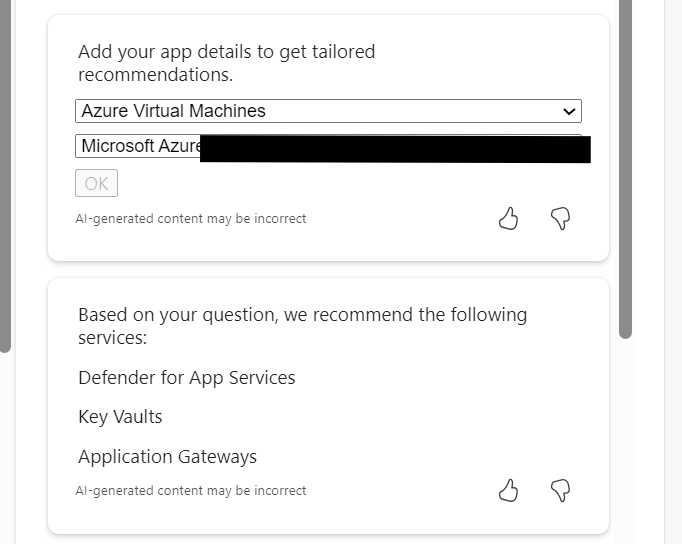

So this approach doesn’t work. When you select the (perhaps) relevant items, the output is not aligned to the original ask.

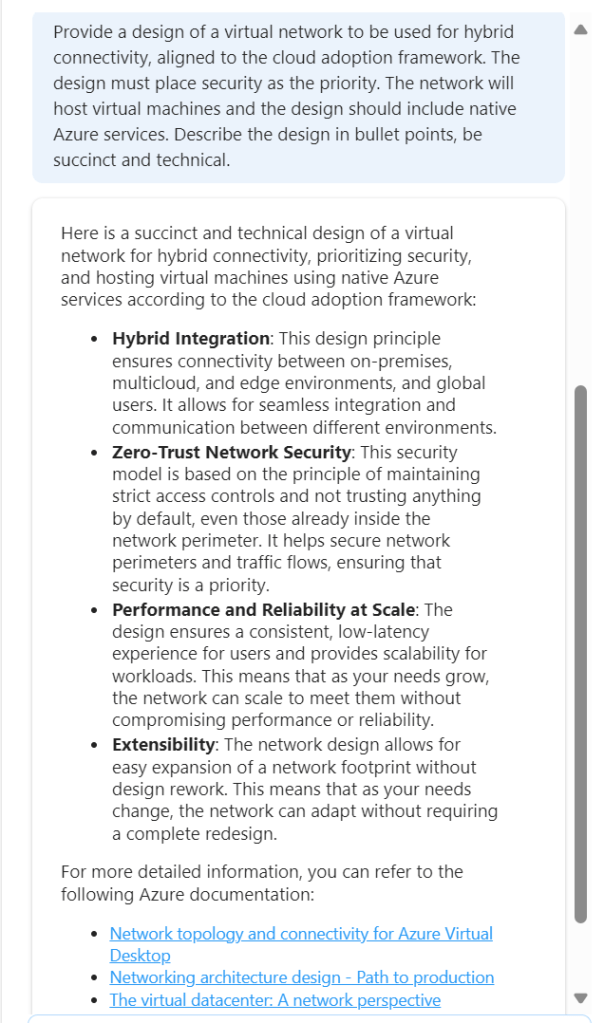

So, let’s try this another way. We know the first recommended prompt returned good results. Rather than continue in a chat-response format, let’s try one very specific prompt. To ensure no confusion, I started a new chat for this.

This is better, but to be honest – I am not looking for design principles like ‘zero trust’. So we need to adjust the wording. Again, I have started a new chat for this.

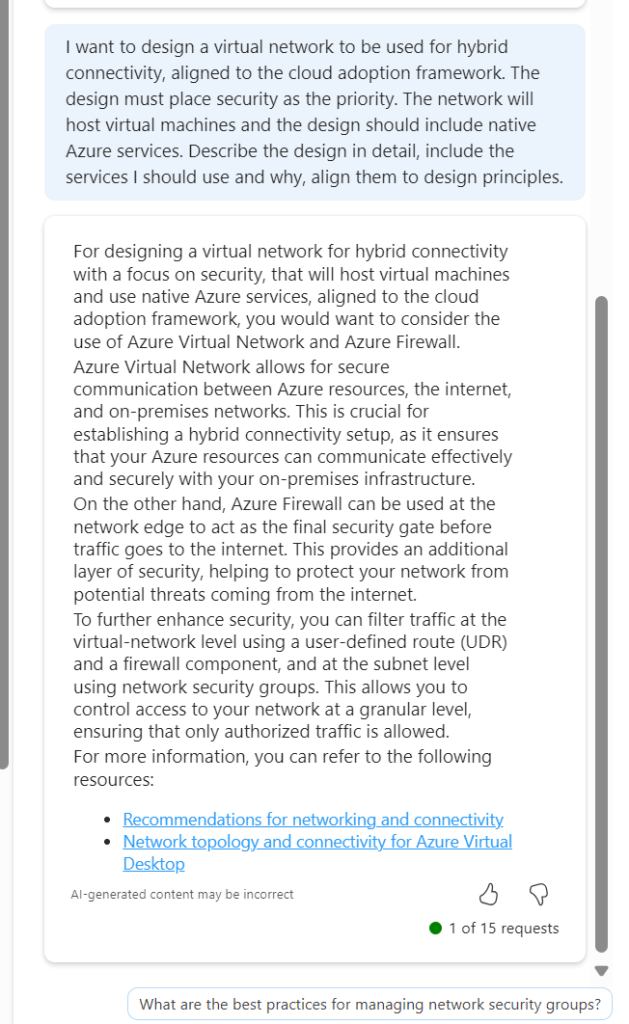

Now we are getting somewhere. If this had included Bastion I would have ranked it 9/10. The first link is good but the second is not, so this scores a 7/10 for me. It is a great improvement on previous asks, and I am trying to ask as few leading questions as possible. I tried a follow-up prompt to get some more detail.

Again, the general detail is good, but the links are hit and miss. This could introduce some confusion. I tried another follow-up from this, but again it went a different route, based on the existing services in my subscription.

Rather than say this didn’t work, I think I set out with a task that isn’t really achievable at present. There are so many elements that require consideration, some sequential, some overlapping, some interdependent, that getting it all in a single chat response is going to be very difficult, if not impossible. At the same time, repeated follow-up responses are also challenging, especially when you are looking for something aligned to best practice rather than something relevant to what you currently have.

Overall, I think Copilot for Azure is improving every month, and the use cases are constantly expanding. However, based on current functionality, I don’t believe it will be able to fully assist with design guidance and decisions beyond providing principles and guided links. For the real design work, you will still need an expert 😉