Update management is a necessary evil in the IT world. Some admins enjoy “Patch Tuesday”; for others it’s the most dreaded day of the month. Microsoft have made strides in relieving the stress that can be associated with patching certain core VMs, but good management still requires a lot of administration.

Within Azure, every time you deploy a Windows Server VM from a Marketplace image, you get the latest available patches. But what options do you have should those VMs need to run for an extended period of time? How do you keep them patched so they adhere to company security policies?

Traditionally, the options were to link Azure to your existing on-premises solution or to build a WSUS or SCCM implementation. Both work well, but for smaller sites they could be considered cumbersome. Now, within Azure itself, making use of some platform objects that you may already be using, you can get a central console view of all of your machine updates.

The two requirements, outside of a VM to manage, are:

- Automation Account

- Log Analytics Workspace

Both of these are basically free (see the latest pricing details for limits etc.) and relatively easy to set up separately. However, the process for enabling update management can also set them up for you should that be required.

To enable update management for a single VM, open the VM blade and choose the Update Management button from the left action menu; it is part of the Operations section. This runs a validation operation to see if the feature is enabled and to assess whether there are Automation Accounts and Log Analytics Workspaces available. The validation process also checks whether the VM is provisioned with the Microsoft Monitoring Agent (MMA) and the Automation hybrid runbook worker. The agent is used to communicate with the VM and obtain information about its update status, which is stored in the Log Analytics Workspace.
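As a rough illustration of what that validation step is checking, here is a minimal Python sketch. The `VmState` class and its field names are hypothetical, made up for this post; this is not how the portal implements the check, just the same checklist expressed in code.

```python
from dataclasses import dataclass


@dataclass
class VmState:
    """Hypothetical snapshot of the things the validation step looks at."""
    has_automation_account: bool
    has_log_analytics_workspace: bool
    has_monitoring_agent: bool
    is_hybrid_runbook_worker: bool


def missing_prerequisites(state):
    """Return the names of the prerequisites that are not yet in place."""
    checks = {
        "Automation Account": state.has_automation_account,
        "Log Analytics Workspace": state.has_log_analytics_workspace,
        "Microsoft Monitoring Agent": state.has_monitoring_agent,
        "Hybrid Runbook Worker": state.is_hybrid_runbook_worker,
    }
    return [name for name, present in checks.items() if not present]


if __name__ == "__main__":
    # A freshly deployed VM with the accounts in place but no agent yet.
    fresh_vm = VmState(True, True, False, False)
    print(missing_prerequisites(fresh_vm))
```

An empty list would mean everything the validation looks for is already present; anything returned is what the enablement process would need to create or install.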

Once you choose from your currently available options, or request to have new ones created, the solution takes roughly 15 minutes to enable. Once enabled, you will see a management page. It takes some time for live data to be collected from the server, but once that completes, the page displays the status of available and missing updates. You can click on individual updates for more information. You can also inspect the log search queries that run to check for updates; these can be modified to suit your environment as required.
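To make the idea of that status page a little more concrete, here is a minimal Python sketch of the kind of summary it presents. The record layout (`computer`, `title`, `classification`, `state`) and the sample data are hypothetical, invented for illustration; they are not the actual Log Analytics schema or the queries the solution runs.

```python
from collections import Counter

# Hypothetical update records; field names and values are illustrative only,
# not the real Log Analytics schema.
SAMPLE_RECORDS = [
    {"computer": "vm-web-01", "title": "Security Update A",
     "classification": "Security", "state": "Needed"},
    {"computer": "vm-web-01", "title": "Update Rollup B",
     "classification": "Update Rollup", "state": "Needed"},
    {"computer": "vm-web-01", "title": "Critical Update C",
     "classification": "Critical", "state": "Installed"},
    {"computer": "vm-sql-01", "title": "Security Update A",
     "classification": "Security", "state": "Needed"},
]


def missing_by_classification(records):
    """Count updates that are still needed, grouped by classification."""
    return Counter(r["classification"] for r in records if r["state"] == "Needed")


def missing_by_computer(records):
    """Map each computer to the titles of the updates it is still missing."""
    result = {}
    for r in records:
        if r["state"] == "Needed":
            result.setdefault(r["computer"], []).append(r["title"])
    return result


if __name__ == "__main__":
    print(dict(missing_by_classification(SAMPLE_RECORDS)))
    print(missing_by_computer(SAMPLE_RECORDS))
```

The real page is driven by log search queries rather than in-memory lists, but the shape of the answer is the same: which updates are missing, on which machines, and of what type.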

Now that your management pane is displaying which updates are missing, you need to install them. You can schedule the installation from the same management pane: schedule a deployment that follows your release schedule and service window. You can choose which update types to include in the deployment. For example, you can include critical or security updates and exclude update rollups. One important thing to note: if an update requires a restart, the VM will reboot automatically.

The scheduling process is very simple. You choose a name for the deployment, the classifications of updates you would like to install, the scheduled start time for the installation and, finally, a maintenance window to ensure compliance with your defined service windows.

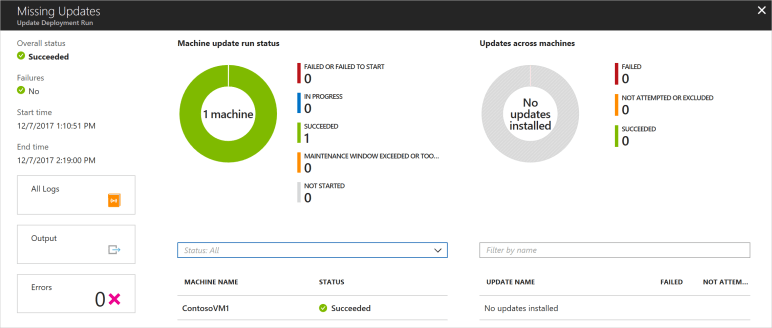
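The four inputs on the scheduling form can be sketched as a small Python model. This is only an illustration of how the settings relate to each other; `UpdateDeployment` and its fields are hypothetical names of my own, not an Azure SDK type, and the dates are arbitrary.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class UpdateDeployment:
    """Hypothetical model of a scheduled deployment; not an Azure SDK type."""
    name: str
    classifications: frozenset      # update types to install
    start: datetime                 # scheduled start of the installation
    maintenance_window: timedelta   # how long the deployment may run

    @property
    def window_end(self):
        """The moment the maintenance window closes."""
        return self.start + self.maintenance_window

    def includes(self, classification):
        """True if updates of this classification are part of the deployment."""
        return classification in self.classifications

    def in_window(self, moment):
        """True if the given moment falls inside the maintenance window."""
        return self.start <= moment < self.window_end


# Include critical and security updates, exclude update rollups.
deployment = UpdateDeployment(
    name="monthly-security",
    classifications=frozenset({"Critical", "Security"}),
    start=datetime(2024, 1, 13, 22, 0),
    maintenance_window=timedelta(hours=2),
)

if __name__ == "__main__":
    print(deployment.includes("Update Rollup"))
    print(deployment.window_end)
```

Modelling the window as a start time plus a duration mirrors the form: you pick when to begin and how long the deployment is allowed to run, and anything outside that range falls outside your service window.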

Once the scheduled deployment runs, you can view its status, again via the Update Management blade. This reports on all stages of the deployment, from “In Progress” to “Partially Failed” etc. You can then troubleshoot any issues should they arise.
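One way to think about a status like “Partially Failed” is as a roll-up of per-machine results. The Python sketch below shows that idea. The roll-up rule and the per-machine values (“Succeeded”, “Failed”) are my own assumptions for illustration, not documented behaviour of the service; only “In Progress” and “Partially Failed” are statuses mentioned above.

```python
def overall_status(machine_results):
    """Roll per-machine results up into one deployment status.

    The rule used here is an assumption for illustration, not the
    service's documented logic.
    """
    statuses = list(machine_results.values())
    if not statuses:
        raise ValueError("deployment has no machines")
    if "In Progress" in statuses:
        return "In Progress"
    if all(s == "Succeeded" for s in statuses):
        return "Succeeded"
    if all(s == "Failed" for s in statuses):
        return "Failed"
    return "Partially Failed"


def machines_to_troubleshoot(machine_results):
    """Names of the machines whose run failed, in alphabetical order."""
    return sorted(name for name, s in machine_results.items() if s == "Failed")


if __name__ == "__main__":
    results = {"vm-web-01": "Succeeded", "vm-sql-01": "Failed"}
    print(overall_status(results))
    print(machines_to_troubleshoot(results))
```

In practice the blade does this aggregation for you; the point is simply that a mixed result tells you which machines to look at first.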

Overall, I really like this solution. It also scales: you can add several machines using the same Automation Account and workspace. From the Automation Account, you can then access the Update Management blade and manage multiple enabled VMs at once, including scheduling multi-VM deployments of updates.

While I haven’t covered it here, this solution also works with Linux distributions and can be integrated with SCCM.

More here:

- Update Management Overview

- Patching Linux

- Manage multiple VMs

- SCCM Integration