Updated: March 2024 – repo link now fully working!

A common Azure Policy requirement for tenants that run Virtual Machine infrastructure is to restrict the VM sizes that can be deployed. There are several reasons for this; below are some of the most common:

- Cost prevention – remove high cost VM SKUs

- Alignment – align to existing SKUs for reservation flexibility

- Governance – align to a governance policy of VM family (e.g. AMD only)

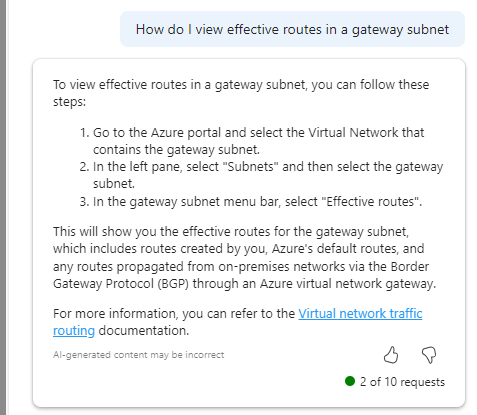

For anyone who has created a Policy like this in the past, the process is simple in principle. Azure provides an out-of-the-box template, and you pick which VM SKUs are allowed from a drop-down. Several years ago that really was straightforward: pick X SKUs from about 100. The list has since grown considerably and continues to get larger, which makes implementing and modifying the Policy exceedingly cumbersome via the Portal.

So, one more manageable and accurate solution is to define this Policy via code. How you manage this (deployment pipelines, repo management, etc.) is outside the scope of this post, but is achievable with this as your base Policy. Two elements are required for this to work correctly:

- Bicep file to deploy Policy resource

- Parameters file for VM SKUs

Item 1 is simple. I’ve included the exact code below, and will link to a repo with all of this at the end too.

```bicep
// subscription scope, to match the subscription-level deployment used later in this post
targetScope = 'subscription'

// params
param vmSKUs array
param vmSKUDefinition string

// vars
var vmSKUName = 'Allowed virtual machine size SKUs'

// deploy policy assignment
resource vmAllowed 'Microsoft.Authorization/policyAssignments@2022-06-01' = {
  name: 'vm-allowed'
  properties: {
    displayName: vmSKUName
    policyDefinitionId: vmSKUDefinition
    parameters: {
      listOfAllowedSKUs: {
        value: vmSKUs
      }
    }
  }
}
```

To explain the above:

- We pass in an array of chosen VM SKUs, and the resource ID of the built-in Policy definition as a string.

- We use a variable to define the display name of the Policy assignment.

- We deploy a single resource object, combining these into one Policy assignment.

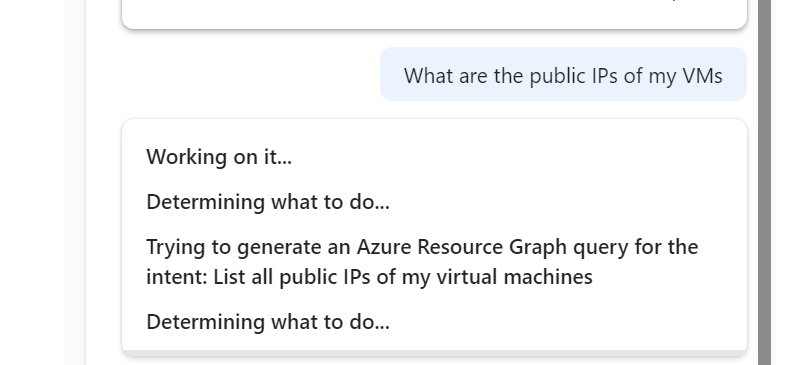

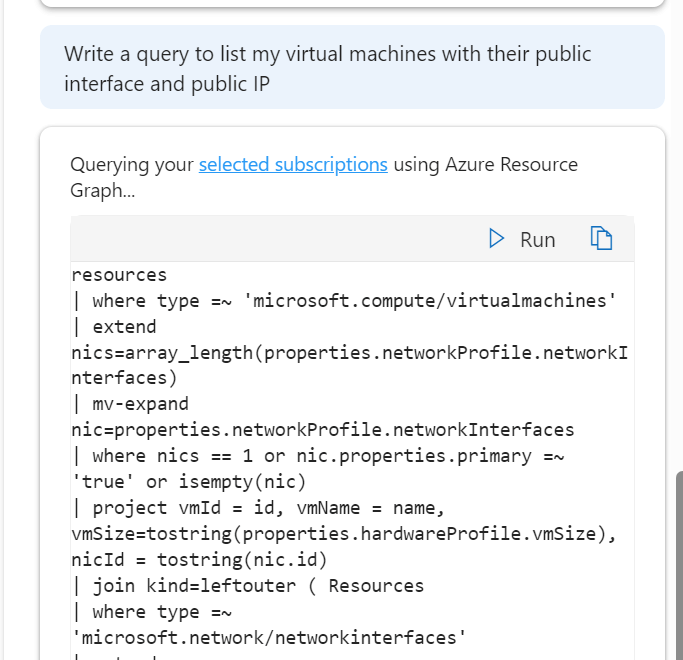

Now, to get to the point where this can be deployed, we need to define our list of chosen VM SKUs. But to get that, we need a list of possible VM SKUs, to then filter down from. There are a couple of ways to achieve this, but to avoid the misery of formatting a hundred or so lines, here is how I did it.

First, not all VM SKUs are available in all regions, so this is our starting point. Figure out the region(s) in scope for this Policy, and work from there. For this example I will use North Europe.

```powershell
Get-AzVMSize -Location 'northeurope'
```

The above gets us a big long list of the VM SKUs available in North Europe via PowerShell. However, it’s a nightmare to use and format, so a small change and an export will help:

```powershell
Get-AzVMSize -Location 'northeurope' | Select-Object Name | Export-Csv -NoTypeInformation C:\folderthatexists\vmksu.csv
```

That gives me a list of 500+ VM sizes. It is still not very manageable directly, but the important part is that if you open the CSV file in VS Code, each name is already wrapped in double quotes, which is the format needed for the JSON parameters file. You can simply copy and paste the lines you need. I found it quicker to remove the lines I don’t want than to select those I do.

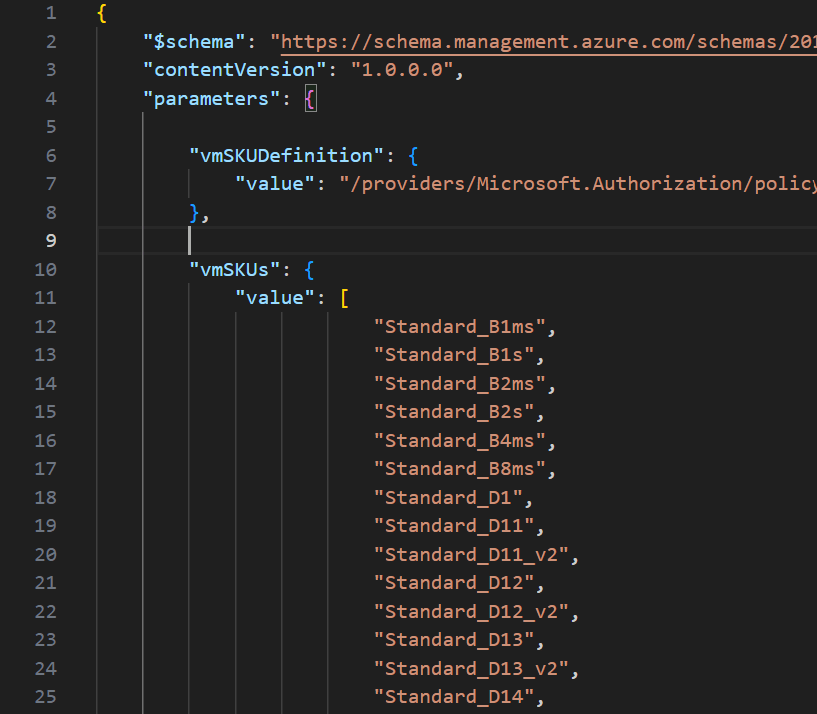

Once your list is complete, it becomes the content of the parameters file that passes our array of SKUs to the deployment.
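As a minimal sketch, a parameters file for the Bicep template above might look like the following. The parameter names `vmSKUs` and `vmSKUDefinition` come from the template; the three SKU names are purely illustrative, and the definition ID is what I understand to be the built-in "Allowed virtual machine size SKUs" definition, so confirm it in your own tenant (Portal or `Get-AzPolicyDefinition`) before relying on it.

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentParameters.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "vmSKUDefinition": {
      "value": "/providers/Microsoft.Authorization/policyDefinitions/cccc23c7-8427-4f53-ad12-b6a63eb452b3"
    },
    "vmSKUs": {
      "value": [
        "Standard_B2s",
        "Standard_D2as_v5",
        "Standard_D4as_v5"
      ]
    }
  }
}
```

The quoted names copied from the CSV go inside the `vmSKUs` array, separated by commas, with no trailing comma after the last entry.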

Now to deploy, simply pick your method! For this post, I am using PowerShell (as I am already logged in) and will complete a Subscription-level deployment, as the Policy is being assigned at Subscription scope. I will pass both files as command parameters and Azure will do the rest. The below should work for you, and I will include a PS1 file in the repo too for reference, but adjust it for your files, tenant, etc.

```powershell
New-AzSubscriptionDeployment -Name 'az-sku-policy-deploy' -Location northeurope -TemplateFile 'C:\folderthatexists\vm-sku-policy.bicep' -TemplateParameterFile 'C:\folderthatexists\policy.parameters.json'
```

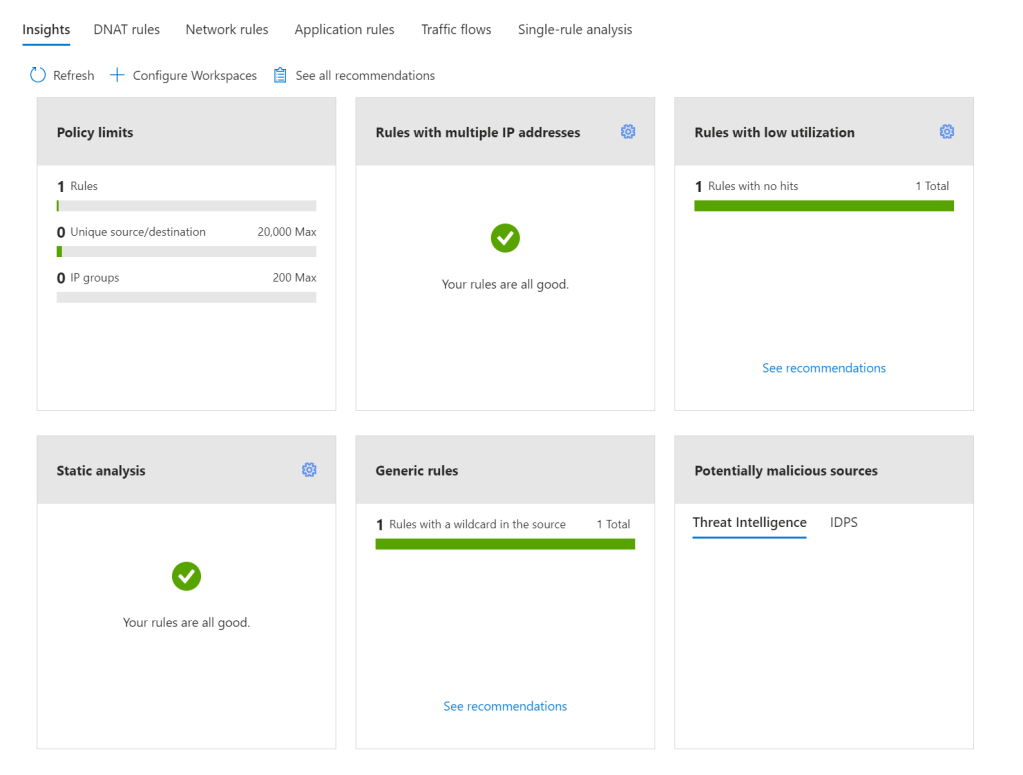

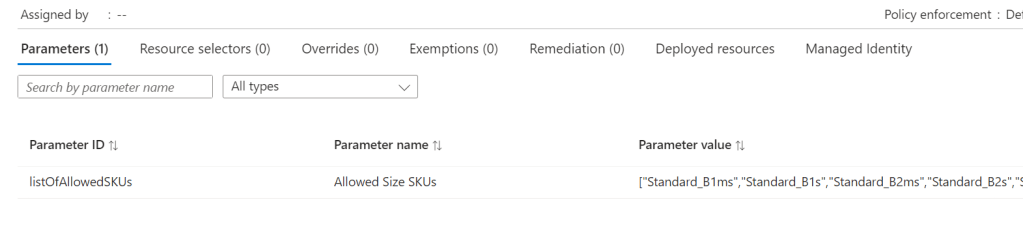

Once that runs successfully, you can verify all is correct via the Portal too. Because this is Bicep, you can run the deployment over and over and it will simply update the Policy assignment if there are changes. All that is required to allow or disallow VM sizes is an update to your VM SKU parameters.

And that’s it! As promised, here is a repo with the files for reference. Please note: as always, all code is provided for reference only and should not be used in your environment without full understanding and testing. I cannot take responsibility for your environment and use of code. Please do reach out if you have questions.